While mixed reality, which covers virtual reality (VR) and augmented reality (AR), has come a long way in recent years, there are still a lot of challenges that developers struggle to overcome in a practical and user-friendly way. Typing is one example, and current virtual keyboard implementations are slow and clumsy. But people already have a sense called proprioception that can help. Created by researchers at KTH Royal Institute of Technology and Ericsson Research, KnuckleBoard is an experimental keyboard interface that takes advantage of users’ proprioception.

Proprioception is your body’s sense of its own movement and position in space. It is why you have the ability to close your eyes and touch your index finger to the tip of your nose on the first try. Similarly, you can probably close your eyes and touch any finger to any other finger’s knuckle.

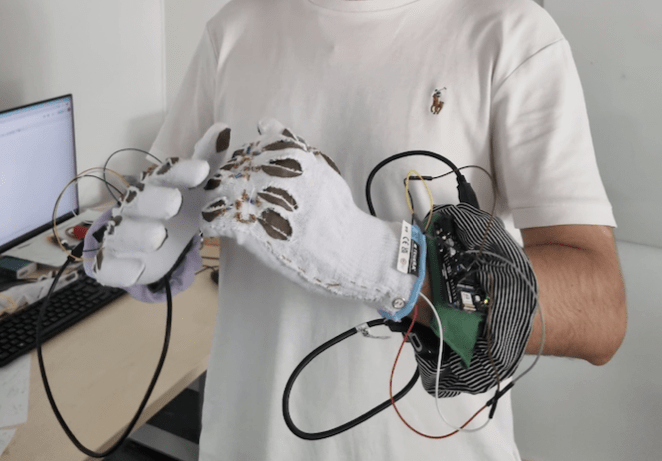

That is the key to KnuckleBoard’s functionality. With special gloves that make each knuckle a touch button, KnuckleBoard lets users type by tapping on their own knuckles. That is easy to do, even when you can’t see your own hands, such as when you’re wearing a VR headset.

With an input scheme similar to traditional chorded keyboards, KnuckleBoard allows for full alphanumeric typing. The prototype KnuckleBoard device consists of two gloves (one for each hand) that have conductive pads on the fingertips and the two sets of knuckles closest to the hand. An Arduino UNO WiFi Rev2 board monitors those pads through analog pins.

Each pad has a unique resistor, so the Arduino can identify the touched pad by its resistance. It then communicates input data wirelessly to a second UNO WiFi connected to Unity, which runs the virtual environment. That environment is a simple notepad for this demonstration, but the functionality could be implemented into other VR and AR software (and even games) to provide a better typing experience.
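To make the resistance-based identification more concrete, here is a minimal Python sketch of how an analog reading could be mapped back to a pad. It is not the researchers' firmware. It assumes a simple voltage divider (the touched pad's resistor between the supply and the analog pin, with a fixed pull-down resistor to ground) and a 10-bit reading from 0 to 1023. The pad names, resistor values, pull-down value, and tolerance are all hypothetical and chosen only for illustration.

```python
ADC_MAX = 1023      # assumed full-scale value of a 10-bit analog reading
R_REF = 10_000.0    # assumed fixed pull-down resistor, in ohms
TOLERANCE = 0.15    # accept estimates within 15% of a nominal value

# Hypothetical pad names and resistor values (ohms); not from the prototype.
PAD_RESISTORS = {
    "index_tip": 1_000.0,
    "index_knuckle_1": 2_200.0,
    "index_knuckle_2": 4_700.0,
    "middle_tip": 10_000.0,
    "middle_knuckle_1": 22_000.0,
    "middle_knuckle_2": 47_000.0,
}


def expected_adc(r_pad):
    """Reading the assumed divider would produce for a given pad resistor."""
    return round(ADC_MAX * R_REF / (r_pad + R_REF))


def estimate_resistance(adc):
    """Invert the divider equation; None means nothing is being touched."""
    if adc <= 0:
        return None  # pin is held at ground by the pull-down resistor
    return R_REF * (ADC_MAX / adc - 1)


def identify_pad(adc):
    """Return the pad whose nominal resistor best matches the reading."""
    r = estimate_resistance(adc)
    if r is None:
        return None
    name, nominal = min(PAD_RESISTORS.items(), key=lambda item: abs(item[1] - r))
    # Reject readings that are not close to any known resistor value.
    if abs(nominal - r) <= TOLERANCE * nominal:
        return name
    return None


if __name__ == "__main__":
    for pad, ohms in PAD_RESISTORS.items():
        reading = expected_adc(ohms)
        print(pad, reading, identify_pad(reading))
```

Spacing the resistor values widely apart, as in this hypothetical table, leaves room for electrical noise and component tolerance, so a slightly off reading still resolves to the intended pad.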

In testing, users found that they could type well with KnuckleBoard even while walking.