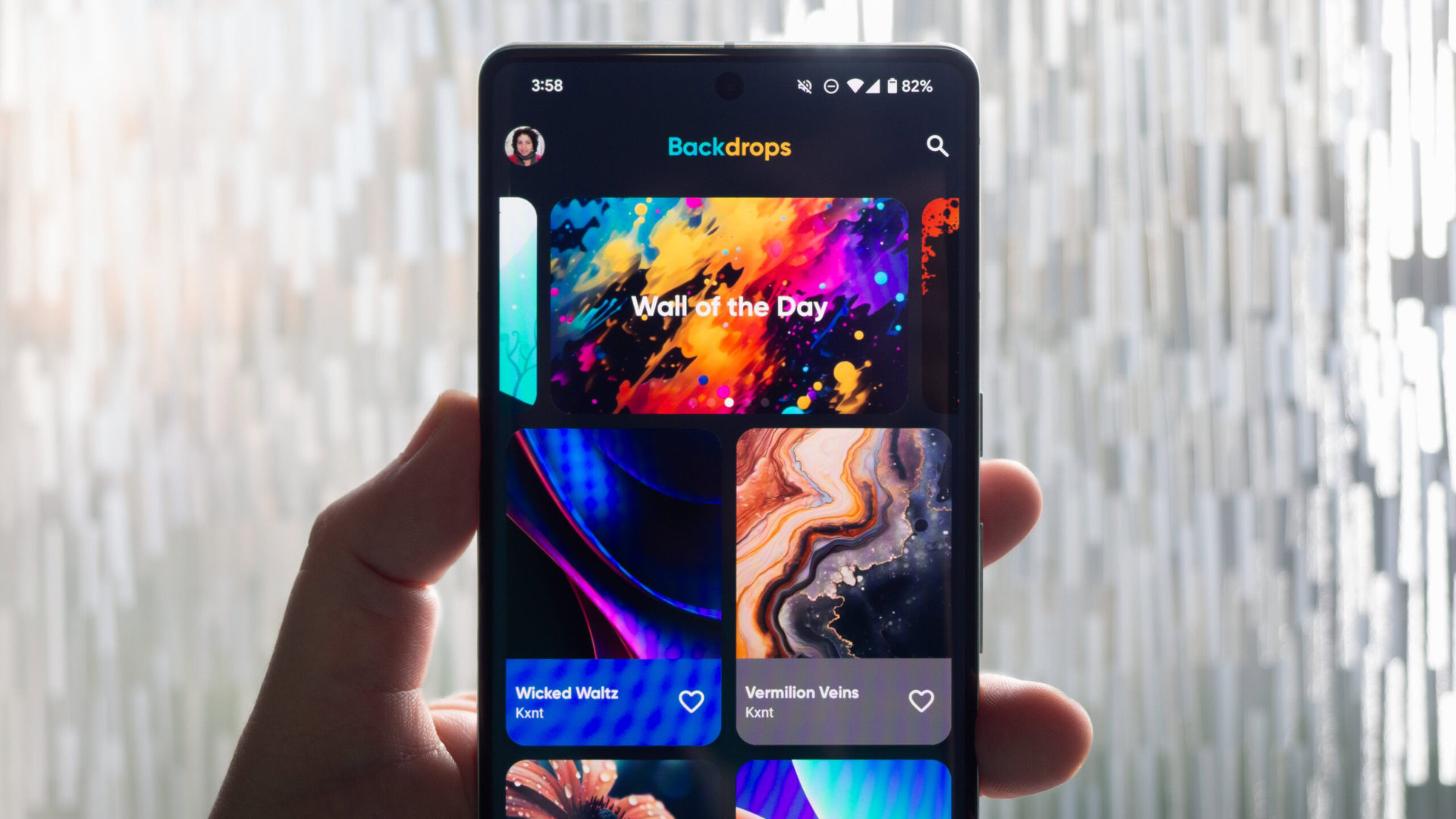

A new Apple-backed study, conducted in collaboration with Aalto University in Finland, introduces ILuvUI: a vision-language model trained to understand mobile app interfaces from screenshots and from natural-language conversations. Here’s what that means, and how they did it.

## ILuvUI: an AI that outperformed the model it was based on

In the paper, ILuvUI: Instruction-tuned LangUage-Vision modeling of UIs from Machine Conversations, the team tackles a long-standing challenge in human-computer interaction (HCI): teaching AI models to reason about user interfaces the way humans do, which in practice means both visually and semantically.

Currently, as the researchers explain, most vision-language models (VLMs) are trained on natural images, like dogs or street signs, so they don’t perform as well when asked to interpret more structured environments, like app UIs.

With that in mind, the researchers fine-tuned the open-source VLM LLaVA and adapted its training method to specialize in the UI domain. They trained it on text-image pairs that were synthetically generated from a few “golden examples.” The final dataset included Q&A-style interactions, detailed screen descriptions, predicted action outcomes, and even multi-step plans (like “how to listen to the latest episode of a podcast” or “how to change brightness settings”). Once trained on this dataset, the resulting model, ILuvUI, outperformed the original LLaVA in both machine benchmarks and human preference tests.

What’s more, it doesn’t require the user to specify a region of interest in the interface. Instead, the model understands the entire screen contextually from a simple prompt.

## How will users benefit from this?

Apple’s researchers say their approach could prove useful for accessibility, as well as for automated UI testing. They also note that while ILuvUI is still based on open components, future work could involve larger image encoders, better resolution handling, and output formats, such as JSON, that work seamlessly with existing UI frameworks.

And if you’ve been keeping up with Apple’s AI research papers, you might be thinking of a recent study investigating whether AI models could not only understand, but also anticipate, the consequences of in-app actions. Put the two together, and things start to get… interesting, especially if you rely on accessibility features to navigate your devices, or just wish the OS could autonomously handle the more fiddly parts of your in-app workflows.

You’re reading 9to5Mac: experts who break news about Apple and its surrounding ecosystem, day after day.
